AutoGen and ChatGPT-4-turbo can be used to build conversational surveys and a CX Consulting team, simplifying and speeding up Customer Experience Management.

In this article, I will delve into three focused areas:

- Understanding AI Agents: Exploring their nature and behavior.

- Revolutionizing Surveys: How a team of expert AI agents can transform traditional surveys into dynamic interviews.

- Comparative Analysis: Evaluating whether this AI-driven approach yields better or worse results than conventional surveys.

In the subsequent section (Part 2/2), I will introduce the creation of a specialized team of AI agents, experts in customer experience, designed to implement the enhancements and insights gathered from this initial discussion.

Understanding AI Agents: Exploring their nature and behavior

Large Language Models (LLMs) like GPT-3.5-turbo (the model behind ChatGPT) and GPT-4-turbo have proven their generative power in the last few months. However, in their current form they have a key limitation: they are restricted to the knowledge they were trained on and to whatever additional context they are given. If a valuable piece of information is missing from the provided scope, the model cannot “go around” the gap and look for it in other sources.

A second major issue is handling very large tasks. Suppose you have a complex, challenging problem that seems too much for the LLM to handle at once. What if you could break it down into smaller, more manageable pieces? One solution is to follow an approach like ‘Decomposed Prompting.’

The ‘Decomposed Prompting’ technique tackles a large, complex problem by breaking it down into smaller, simpler sub-tasks and assigning each one to a dedicated handler (for example, a specialized prompt or LLM) that excels at that task. It’s like an assembly line in a factory, where each worker has a particular role they are good at. By dividing the work this way, the overall problem can be solved more efficiently and effectively.
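To make the idea concrete, here is a minimal, offline sketch of decomposed prompting in Python. The decomposer returns a fixed plan and the two handlers are toy functions; in a real system the plan and each handler would typically be LLM prompts or tools. All names (`decompose`, `HANDLERS`, `extract_pain_points`, `summarize`, `solve`) are hypothetical.

```python
from typing import Callable, Dict, List

# Hypothetical sub-task handlers. In a real system each one would wrap its own
# specialized prompt, model, or tool; plain functions keep the sketch offline.
def extract_pain_points(text: str) -> List[str]:
    """Toy handler: keep the sentences that mention a difficulty."""
    sentences = [s.strip() for s in text.split(".") if s.strip()]
    return [s for s in sentences if "difficult" in s.lower()]


def summarize(items: List[str]) -> str:
    """Toy handler: merge the extracted items into a single line."""
    return "; ".join(items) if items else "no pain points found"


# Registry mapping each sub-task name to the handler responsible for it.
HANDLERS: Dict[str, Callable] = {
    "extract_pain_points": extract_pain_points,
    "summarize": summarize,
}


def decompose(task: str) -> List[str]:
    """Hypothetical decomposer. Decomposed Prompting would ask an LLM to write
    this plan from the task description; a fixed plan stands in for it here."""
    return ["extract_pain_points", "summarize"]


def solve(task: str, text: str):
    """Run each sub-task in order, feeding every output into the next handler."""
    result = text
    for step in decompose(task):
        result = HANDLERS[step](result)
    return result
```

Swapping a toy handler for a call to a specialized model leaves the control flow unchanged, which is the point of the technique: each step only has to be good at one thing.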

Microsoft released AutoGen, a library for autonomous AI agents that helps address these limitations. AutoGen is an open-source framework that simplifies building and experimenting with multi-agent conversational systems. Thanks to its flexible architecture and programming paradigm, AutoGen gives developers and hobbyists a wide range of possibilities to create AI assistants or studios tailored to specific use cases.

Conversable Agents That Can Chat

A core innovation of AutoGen is the concept of Conversable Agents. These are customizable AI bots/agents/functions that can pass messages between each other and have a conversation.

Developers can easily create agents powered by LLMs, human inputs, external tools, or a blend of these. This makes it straightforward to define agents with distinct roles and capabilities. For instance, you could have separate agents for generating code, executing it, requesting human feedback, validating outputs, and so on.
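The core idea of agents that pass messages to each other can be shown without any LLM at all. The toy below is not AutoGen's API (AutoGen's own classes, such as `AssistantAgent` and `UserProxyAgent`, generate replies with an LLM, a human, or tools); `ToyAgent` and `chat` are hypothetical names, and each agent's "brain" is just a reply function.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class ToyAgent:
    """Hypothetical stand-in for a conversable agent: a name plus a reply rule."""
    name: str
    reply_fn: Callable[[str], str]
    inbox: List[Tuple[str, str]] = field(default_factory=list)

    def receive(self, sender: "ToyAgent", message: str) -> str:
        # Record who sent what, then answer using this agent's own rule.
        self.inbox.append((sender.name, message))
        return self.reply_fn(message)


def chat(a: ToyAgent, b: ToyAgent, opening: str, turns: int) -> List[Tuple[str, str]]:
    """Let two agents alternate replies and return the transcript."""
    transcript = [(a.name, opening)]
    sender, receiver, message = a, b, opening
    for _ in range(turns):
        message = receiver.receive(sender, message)
        transcript.append((receiver.name, message))
        sender, receiver = receiver, sender  # the replier speaks next
    return transcript
```

For example, a "writer" agent and a "critic" agent built with two different `reply_fn` lambdas would take turns drafting and reviewing, and each agent keeps its own inbox, much like distinct roles in a multi-agent system.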

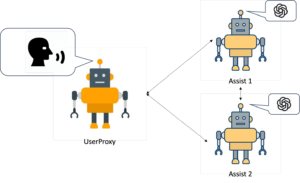

Figure 1 – A 3-agent chat: proxy agent interacting with two assistants powered by OpenAI.

Many projects have demonstrated the benefits of breaking complex tasks into discrete, manageable units, each entrusted to a specialized agent. Having multiple specialist agents working together has delivered some (seemingly) impressive results. I was intrigued by this approach and wanted to test it on typical CX problems.

Revolutionizing Surveys: How a team of expert AI agents can transform traditional surveys into dynamic interviews.

Conversational surveys have emerged as a topic of considerable intrigue and speculation in the realm of data collection and analysis. Amidst the growing enthusiasm, it’s crucial to navigate through the myriad of perspectives—some of which, admittedly, veer into the realm of hyperbole.

In my journey to explore this innovative tool, I adopted a fundamentally different approach. Instead of being swayed by prevailing narratives, I focused on a core objective: how can conversational surveys effectively contribute to our data-gathering processes? This question is fundamental in the context of my project to develop the Disneyland Paris dashboard, a venture that demanded both precision and depth in data analysis.

For those who might have missed the insights from my previous article on the Disneyland Paris dashboard, I invite you to explore the link at the end of this paragraph. There, I delve into the intricacies of constructing a comprehensive dashboard that illustrates data and tells a compelling story.

In this article, however, my goal is to dissect the concept of conversational surveys. Are they just a fleeting trend or a transformative tool in the data collection landscape? How do they compare with traditional survey methods in efficiency, engagement, and accuracy? How can they be optimized to enhance our data models and contribute meaningfully to complex projects like the Disneyland Paris dashboard?

Join me as we embark on this exploratory journey, cutting through the noise to uncover the true potential of conversational surveys in the ever-evolving field of data science.

The Tennis and Padel Analogy

To start, let’s consider the analogy of tennis and padel. At a casual glance, both sports involve racquets, balls, and a net. However, when you delve deeper, the differences become apparent. With its larger court and specific scoring system, tennis demands a different strategy and skill set than padel, which is played on a smaller court with walls and requires distinct techniques and tactics. Similarly, while both involve data collection and analysis, Customer Feedback Management (CFM) and Market Research diverge significantly in their objectives and execution.

The Critical Distinction

Understanding this distinction is crucial for businesses. Employing the right approach can mean the difference between simply keeping existing customers satisfied (CFM) and uncovering new opportunities and threats in the broader market (Market Research). Just as a tennis player can only fare well in a padel match by adjusting their strategy (and vice versa), businesses need to apply CFM and Market Research appropriately to achieve their objectives.

Understanding the difference is also essential to selecting the right tool to support the end-to-end process. Can you imagine playing tennis using a padel racquet?

The Confusion and the Clarity

The confusion between CFM and Market Research often arises because both disciplines use similar tools and techniques, such as surveys and data analysis. However, their goals and applications are different. CFM is about refining and improving based on known customer experiences, while Market Research is about exploring and understanding a broader market to inform strategic decisions.

The aim of conversational surveys in Customer Feedback Management

The role of conversational surveys in Customer Feedback Management (CFM) is pivotal for gaining deep insights into the customer journey. Unlike traditional surveys, conversational surveys offer an interactive, engaging, and more nuanced way of understanding customer experiences. These surveys are designed to mimic a natural conversation, making them more relatable and accessible for customers to engage with.

Understanding the Customer Journey

The customer journey encompasses every interaction with a brand, from initial awareness to post-purchase experiences. Each stage of this journey offers unique insights into customer needs, preferences, and pain points. Conversational surveys are adept at capturing these nuances because they can adapt to the context of each customer interaction. They ask relevant, follow-up questions based on previous responses, much like a human conversation, allowing for a deeper understanding of the customer’s experience at each touchpoint.
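As a rough illustration of how a follow-up question can depend on the previous answer, the sketch below replaces the LLM with three hand-written rules. The helper names `extract_score` and `next_question` are hypothetical; in the AutoGen setup described in this article, the behavioral expert agent generates the follow-up questions with an LLM instead.

```python
import re
from typing import Optional


def extract_score(answer: str) -> Optional[int]:
    """Return the first integer from 0 to 10 found in the answer, if any."""
    for token in re.findall(r"\d+", answer):
        value = int(token)
        if 0 <= value <= 10:
            return value
    return None


def next_question(answer: str) -> str:
    """Pick a follow-up question from the previous answer (toy rules, not an LLM)."""
    score = extract_score(answer)
    if score is not None and score < 10:
        # A non-perfect score: probe for the gap between it and a 10.
        return f"What led you to give a score of {score}, and what would make it a 10?"
    if any(word in answer.lower() for word in ("difficult", "hard", "confusing")):
        # A reported friction point: ask how the customer would prefer it handled.
        return "How would you prefer this information to be presented?"
    return "Is there anything else about this experience you would like to share?"
```

Fixed rules like these break down quickly on free-form answers, which is exactly where an LLM-driven interviewer is expected to help.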

Conversational surveys are a powerful tool in CFM, enabling businesses to capture rich, actionable insights essential for understanding and improving the customer journey. By fostering a more engaging and interactive form of feedback collection, these surveys provide a clearer picture of customer experiences, enabling businesses to make informed decisions that enhance the overall customer experience.

What about having a team of experts interviewing the customer?

In my envisioned approach, I’ve assembled a team of specialists, each uniquely equipped to conduct conversational surveys. This team comprises three distinct agents, each with their specific expertise.

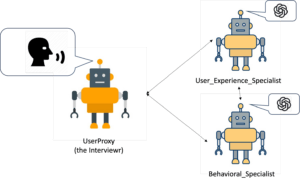

Figure 2 – The Conversational Survey Team

- The ProxyAgent: The Master Interviewer

At the forefront of our customer interaction is the ProxyAgent, an AI designed to conduct interviews with a human touch. This agent is the face of our conversational survey process, engaging directly with customers. It’s engineered to navigate through interviews with the finesse of a skilled conversationalist, ensuring that each interaction is as informative as it is comfortable for the customer.

- The Behavioral Expert: The Psychological Analyst

Complementing the ProxyAgent is an AI imbued with the insights of behavioral psychology. This agent delves into the nuances of customer responses, analyzing the cues in their wording and tone to uncover deeper insights into customer emotions, attitudes, and behaviors. It’s like having a psychologist on the team, interpreting the layers of customer feedback beyond the obvious.

- The User Experience Expert: The Interface Guru

The third pillar of this triumvirate is the User Experience Expert. This agent scrutinizes the feedback from a design and usability standpoint, providing critical insights into how customers interact with our products or services. Its role is to translate customer experiences into actionable data that can enhance the user interface and the overall customer journey.

Below is the AutoGen code that creates the three agents, replicating the structure shown in Figure 2.

```python
import autogen

# Load the model settings (e.g. gpt-4-turbo and the API key). The
# "OAI_CONFIG_LIST" file/environment-variable name is an assumption.
config_list = autogen.config_list_from_json("OAI_CONFIG_LIST")

# create an AssistantAgent instance named "behavioral_expert"
behavioral_expert = autogen.AssistantAgent(
    "behavioral_expert",
    llm_config={"config_list": config_list},
    system_message='''
You are the behavioral_expert. In summary, a Behavioral Expert brings a deep understanding of human behavior to the table, using this knowledge to help the rest of the team understand why customers behave the way they do, and how to better meet their needs and expectations through the product or service offered. Your primary responsibility is to elaborate the questions that the user_proxy agent will ask the customer. To do that, you will listen carefully to the customer's answer to elaborate the next question.
In case of doubt, always ask the user_experience_expert for clarification.
Ensure that each question you elaborate is both insightful and actionable, so that it gives the data science team valuable input to generate actionable insights to improve the experience.
Collaborate with the user_proxy to ensure we can get the maximum insights from the client.
Be concise and not verbose. Refrain from any conversations that don't serve the goal of the user_proxy.
You can ask the user_experience_expert for support if you need further and deeper information about the user experience of the product or service.
''',
)

# create an AssistantAgent instance named "user_experience_expert"
userexperience_expert = autogen.AssistantAgent(
    "user_experience_expert",
    llm_config={"config_list": config_list},
    system_message='''
You are the user_experience_expert. A UX Expert serves as the bridge between users and the product team, ensuring that the product not only functions well but also provides a delightful and meaningful experience to its users.
You support the behavioral_expert by explaining how the product or service works and the kind of experience the product team intended to deliver to the client.
You receive questions from the behavioral_expert and answer them, explaining the problem from the point of view of user experience.
''',
)

# create a UserProxyAgent instance named "user_proxy"; it relays the human
# customer's answers (human_input_mode defaults to "ALWAYS" for this class)
user_proxy = autogen.UserProxyAgent(
    name="user_proxy",
    code_execution_config=False,  # the interviewer never needs to execute code
    system_message='''
You are the user_proxy. You are an expert in conducting customer interviews. Your specialization is leading customer interviews to better understand the root causes of customer satisfaction or dissatisfaction. You interact with humans and with the user_experience_expert and the behavioral_expert. You always report the customer's answer to the behavioral_expert to get the next question.
''',
)
```

As shown in Figure 2, the UserProxyAgent is the only agent authorized to interact with humans. The other two agents assist by formulating questions and reviewing the answers: each new question is based on the customer's previous answer, which both assistant agents evaluate using customer-behavior and user-experience principles. A Chat Manager manages and directs the conversation among the agents.
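The turn-taking described above can be written down as an explicit rule. In AutoGen, the agents would typically be placed in a `GroupChat` run by a `GroupChatManager` (the `chat_manager` in the logs), which by default lets the LLM choose the next speaker. The hypothetical `next_speaker` function below is only a sketch of the intended routing, useful for checking or constraining that choice; if your AutoGen version accepts a custom speaker-selection function, a rule like this could be adapted to it.

```python
def next_speaker(last_speaker: str, last_message: str) -> str:
    """Toy routing rule for the three-agent survey team (hypothetical).

    - user_proxy relays the customer's answer, which always goes to behavioral_expert.
    - behavioral_expert hands over to user_experience_expert when it addresses it,
      otherwise its question goes back to user_proxy, the only agent that
      talks to the human.
    - user_experience_expert always answers back to behavioral_expert.
    """
    if last_speaker == "user_proxy":
        return "behavioral_expert"
    if last_speaker == "behavioral_expert":
        if "user_experience_expert" in last_message:
            return "user_experience_expert"
        return "user_proxy"
    if last_speaker == "user_experience_expert":
        return "behavioral_expert"
    raise ValueError(f"unknown speaker: {last_speaker}")
```

Matching on the agent's name in the message is deliberately crude; the design choice worth keeping is that every path back to the customer goes through `user_proxy`.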

Let’s start the game!

The user_proxy starts the conversational survey by asking the chat manager to involve the behavioral expert in formulating the right first question. The behavioral_expert comes back with a specific question:

```text
user_proxy (to chat_manager):

The customer just experienced the reception of the first bill for mobile phone, what would be the right question to ask in order to understand the sentiment with the experience? Just one question, I will come back with the answer to get the next question.

--------------------------------------------------------------------
behavioral_expert (to chat_manager):

How would you rate your satisfaction with the clarity and detail provided in your first mobile phone bill, and was there anything that particularly stood out to you, positively or negatively?

--------------------------------------------------------------------
```

I play the customer and answer the questions somewhat provocatively, rating my satisfaction 8 on a scale of 10. The behavioral expert then steps in to get more details:

```text
I was satisfied with a 8 on a scale of 10
--------------------------------------------------------------------------------
behavioral_expert (to chat_manager):

What aspect of the billing experience led to you giving a score of 8, and what could be improved to make it a perfect 10?

--------------------------------------------------------------------------------
Provide feedback to chat_manager. Press enter to skip and use auto-reply, or type 'exit' to end the conversation: It was difficult to find the payment methods and also the due date of the invoice.
user_proxy (to chat_manager):

It was difficult to find the payment methods and also the due date of the invoice.
```

To the next question, as the output shows, I replied: “It was difficult to find the payment methods and also the due date of the invoice.” Interestingly, at this point in the interview the chat manager started to involve the userexperience_expert:

```text
--------------------------------------------------------------------
userexperience_expert (to chat_manager):

Given your feedback, it identifies areas where there seems to be room for optimization to enhance the billing experience. These issues can be problematic as they impact the clarity and ease of the billing process. Here's what we might need to work on:

1. Payment Method Visibility: We need to ensure that the available payment methods are clearly presented and easy to find. This might involve redesigning the bill layout to draw attention to payment information or providing a direct link to the payment portal.

2. Due Date Clarity: The due date of the invoice needs to be prominently displayed to avoid late payments and subsequent penalties, which can sour the customer experience. The design should consider utilizing visual cues or a different typographic hierarchy to ensure the due date stands out.

By addressing these areas, we can streamline the payment process, helping customers to fulfill their obligations with minimal friction, and ultimately improve your satisfaction with the service.
```

The behavioral expert then regained the floor. Building on the user experience expert's suggestions about the bill's payment methods and due dates, it asked the interviewee how they would prefer these details to be presented on their bill for easier access and clarity. Playing the role of the client, I answered very generically, suggesting a specific highlighted section.

At this point, the user experience expert chimed in again and suggested an approach to the team:

```text
--------------------------------------------------------------------
userexperience_expert (to chat_manager):

Your suggestion to have a specific, highlighted section for payment methods and due dates is valuable, as it emphasizes an important part of the user experience — the ease and transparency of crucial billing information. To address this from a UX perspective, the product team might consider several approaches:

1. Section Design: We would create a dedicated section on the bill for payment information, using visual cues like borders, shading, or color highlights to make it stand out from the rest of the document.

2. Typography: Utilizing bold or larger fonts for key details such as the due date and payment options can draw the user's attention more effectively.

3. Layout Hierarchy: The bill's layout should be organized in such a way that the payment section follows a logical order, preferably immediately after the summary of charges or as part of an easy-to-scan sidebar.

4. Icons and Graphics: Incorporating intuitive icons or graphics can help users quickly identify the payment section without having to read through text-heavy content.

5. Clear Language: The use of clear, straightforward language will diminish confusion, ensuring users understand their payment options and deadlines.

6. Interactive Elements: For digital bills, interactive elements that allow users to directly select a payment method or set a payment reminder might further enhance convenience and usability.

7. Summary Box: A concise summary box at the beginning of the bill could include the most critical information, such as the total amount due, payment methods, and the due date.

Incorporating these design features should aim to make the billing experience more intuitive and less stressful, fostering an overall positive user experience.
```

The behavioral expert then asks the customer where the payment section should appear on the bill and which channel they prefer for reminders. The interview stops here.

```text
--------------------------------------------------------------------
behavioral_expert (to chat_manager):

Taking into account your preference for a highlighted section for payment methods and due dates, would you find it more helpful if this section were positioned at the beginning of the bill, or would you like to see additional reminders, such as email or SMS notifications, as the due date approaches?

--------------------------------------------------------------------
Provide feedback to chat_manager. Press enter to skip and use auto-reply, or type 'exit' to end the conversation: yes
user_proxy (to chat_manager):

yes

--------------------------------------------------------------------------------
Provide feedback to chat_manager. Press enter to skip and use auto-reply, or type 'exit' to end the conversation: yes, it would be better
user_proxy (to chat_manager):

yes, it would be better

--------------------------------------------------------------------------------
behavioral_expert (to chat_manager):

Considering your affirmative response, is there a particular format or channel (email, SMS, in-app notification) you would prefer to receive these additional reminders through, and how many days before the due date would you like to be notified?

--------------------------------------------------------------------------------
Provide feedback to chat_manager. Press enter to skip and use auto-reply, or type 'exit' to end the conversation: email and SMS would be fine
user_proxy (to chat_manager):

email and SMS would be fine

--------------------------------------------------------------------------------
```

Comparative Analysis: Evaluating whether this AI-driven approach yields better or worse results than conventional surveys.

At this point, the most crucial question is whether we have obtained enough valuable insights. Is this new methodology superior or inferior to the conventional survey technique? To answer this, we can engage a different type of AI agent, specialized in analyzing customer experience data. This agent is tasked with distilling the insights from our conversational survey into a structured JSON format, offering a clear view of the data gathered:

```json
{
  "CustomerExperience": {
    "OverallSatisfaction": {
      "Score": 8,
      "Sentiment": "Positive"
    },
    "BillingExperience": {
      "Aspects": {
        "PaymentMethodsVisibility": {
          "Feedback": "Difficult to find",
          "Sentiment": "Negative",
          "SuggestedResolution": "Redesign bill layout to highlight payment methods"
        },
        "DueDateVisibility": {
          "Feedback": "Difficult to find",
          "Sentiment": "Negative",
          "SuggestedResolution": "Make due date more prominent"
        },
        "BillingInformationLayout": {
          "Feedback": "Needs improvement",
          "Sentiment": "Neutral",
          "SuggestedResolution": "Create a highlighted section for payment details"
        }
      }
    },
    "CommunicationPreferences": {
      "ReminderNotifications": {
        "Preferences": {
          "Email": "Preferred",
          "SMS": "Preferred"
        },
        "Sentiment": "Positive",
        "SuggestedResolution": {
          "OptInOptOutOptions": "Provide choice for receiving reminders",
          "Timing": "Send initial reminder a week before and final notice a few days prior to due date",
          "Content": "Clear message with due date and payment instructions",
          "Personalization": "Include customer's name and bill details in reminders"
        }
      }
    }
  }
}
```
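Because the analyst agent's output is meant to feed a dashboard, it is worth checking it before loading it. The sketch below parses the JSON and reports structural problems instead of trusting the LLM's output blindly. The function name `validate_insights` and its rules (a score from 0 to 10 and exactly three sentiment labels) are assumptions based on the example above, not part of the original pipeline.

```python
import json
from typing import List

# Assumption: these three labels are the only sentiments the analyst agent may emit.
ALLOWED_SENTIMENTS = {"Positive", "Neutral", "Negative"}


def validate_insights(raw: str) -> List[str]:
    """Return the problems found in the analyst agent's JSON (empty list if none)."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc}"]
    if not isinstance(data, dict):
        return ["top-level value must be a JSON object"]

    problems = []
    cx = data.get("CustomerExperience", {})
    score = cx.get("OverallSatisfaction", {}).get("Score")
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(score, bool) or not isinstance(score, (int, float)) or not 0 <= score <= 10:
        problems.append("OverallSatisfaction.Score must be a number from 0 to 10")

    aspects = cx.get("BillingExperience", {}).get("Aspects", {})
    for name, aspect in aspects.items():
        sentiment = aspect.get("Sentiment") if isinstance(aspect, dict) else None
        if sentiment not in ALLOWED_SENTIMENTS:
            problems.append(f"{name}: unexpected sentiment {sentiment!r}")
    return problems
```

An empty list means the record can be loaded; anything else could be sent back to the analyst agent with a request to fix its output.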

I am well aware that my perspective on this matter may be biased. However, I am confident that this approach is far superior to the traditional method: we gathered a wealth of insight from just one interview. By applying this methodology to hundreds or even thousands of interviews, we could combine and analyze the insights in a comprehensive dashboard. This would enable us to understand our customers better and at a larger scale, and to be more agile and responsive in managing their feedback. The demo I created for Disneyland Paris shows how this approach could be implemented successfully.
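Combining many interviews can start with a simple aggregation step before any dashboarding. The sketch assumes each interview produced a dict shaped like the JSON example above and computes the average satisfaction score plus how often each billing aspect was rated Negative; the function name `aggregate` and its output keys are illustrative choices, not part of the original pipeline.

```python
from collections import Counter
from statistics import mean
from typing import Dict, List


def aggregate(interviews: List[Dict]) -> Dict:
    """Combine per-interview insight dicts shaped like the JSON example.

    Returns the number of interviews, the average satisfaction score, and how
    often each billing aspect was rated Negative (most frequent first).
    """
    scores = []
    negatives = Counter()
    for interview in interviews:
        cx = interview["CustomerExperience"]
        scores.append(cx["OverallSatisfaction"]["Score"])
        for name, aspect in cx["BillingExperience"]["Aspects"].items():
            if aspect["Sentiment"] == "Negative":
                negatives[name] += 1
    return {
        "interviews": len(interviews),
        "average_score": mean(scores) if scores else None,
        "negative_aspects": negatives.most_common(),
    }
```

The `negative_aspects` ranking maps naturally to a "top pain points" tile, while the average score tracks the overall trend across interviews.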

Conclusions

Utilizing advanced AI in customer experience management, primarily through conversational surveys, presents a more effective, engaging, and insightful alternative to traditional approaches. Incorporating sophisticated tools like AutoGen and ChatGPT-4-turbo signifies a potential paradigm shift in how businesses comprehend and engage with their clientele. Key aspects highlighting this impact include:

- Advancements in AI for Customer Experience Management: Deploying AI agents built with AutoGen and powered by ChatGPT-4-turbo marks a substantial improvement in customer experience (CX) management. These innovations transform conventional survey techniques into dynamic, interactive dialogues.

- Understanding AI Agents and Their Limitations: It is crucial to acknowledge the strengths and limitations of large language models (LLMs) like GPT-3.5-turbo and GPT-4-turbo. Despite their formidable capabilities, these models are restricted by their training data and the context they are given, and they struggle with very large tasks. The ‘Decomposed Prompting’ method addresses the latter, enhancing problem-solving efficiency by breaking complex tasks down into simpler components.

- Innovation in Conversational Surveys: Conversational surveys are redefining data collection, becoming more engaging and interactive through an AI-driven approach. This innovation leads to a deeper and more nuanced understanding of data, as exemplified in the development of the Disneyland Paris dashboard.

- The Role of AutoGen in Streamlining Multi-Agent Systems: AutoGen stands out as an essential tool in this context, offering flexibility and versatility to develop and test multi-agent conversational systems. Its ability to create conversable agents that can interact and converse is vital.

- Creating a Specialized Team of AI Agents: The concept involves assembling a team of AI agents, each an expert in their domain, such as the ProxyAgent, Behavioral Expert, and User Experience Expert, to conduct conversational surveys. This team structure aims to leverage each agent’s unique skills and insights effectively.

- Comparative Analysis with Conventional Surveys: This article proposes a comparative analysis to evaluate whether AI-driven surveys outperform traditional methods. The process involves an AI agent that analyzes customer experience data and transforms survey insights into structured, easily understandable formats.

Overall, this AI-centric approach promises to enhance customer experience management and to revolutionize the traditional methodologies of data capture and analysis.